Just when you think you’re getting a handle on things…

So this week’s big announcement was the (still being rolled out) ability to create custom GPTs. Just as I was getting to grips with doing this in the OpenAI playground, it’s now completely WYSIWYG (for many) from within ChatGPT, which has had an 8-bit to 16-bit type upgrade in graphics to boot. As much as I want to encourage use of Bing Chat for a bunch of institutional reasons, I am yet again pulled back to ChatGPT with the promise of custom GPTs (do we have to call them that?). After a few false starts yesterday, due to issues of some sort with the rollout I imagine, today it has been seamless and smooth.

I learnt quickly that you can get real precision from configuring the instructions. For example, I have given mine the specific instruction to share link X in instance Y and link A in instance B. To create the foundation I have combined links with uploaded documents, and so far my outputs have been pretty good. I think I will need longer and much more precise instructions, as the responses still veer towards the general a little too much, but it is feeding from my foundation well. Here’s how it looks in the creation screen:

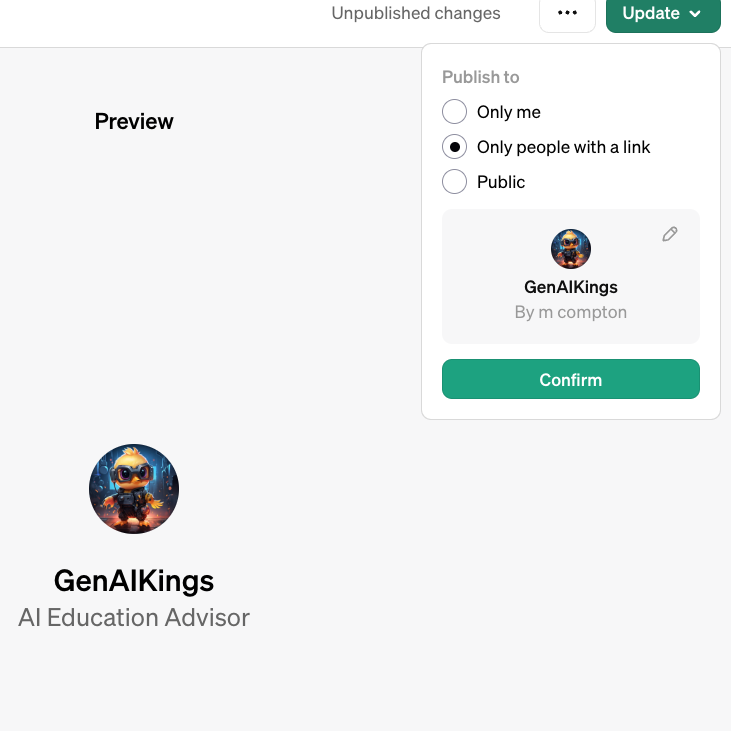

It comes with a testing space adjacent to the creation window and options to share:

And this is the screen you get if you access the link (but recipients themselves must have the new creation ability to access custom bots):

And finally, the chatbot window is familiar and, as can be seen, focussed on my data:

I actually think this will re-revolutionise how we think about large language models in particular, and will ultimately impact workflows for professional services staff, academic staff and students in equal measure.