I’m reading Leopold Aschenbrenner’s extended collection of essays Situational Awareness: The Decade Ahead (June 2024). It made me think about so many things that I thought I’d start the academic year on my blog with a breathless brain dump. You never know, I might need one for every chapter! In the first chapter Aschenbrenner extrapolates from several examples to make predictions about AI capabilities in the near future, stating: “I make the following claim: it is strikingly plausible that by 2027, models will be able to do the work of an AI researcher/engineer.”

The (heavily caveated!) prediction of near-future AGI and the replacement of cognitive jobs within a timeframe that doesn’t even see me to retirement is bold, but it is definitely not set out in a Terminator / tin-foil-hat way either. Given the structured and systematic approach, which includes pushing us to engage with our reactions at stages in the very recent past (‘this is what impressed us in 2019!’), it is hard not to be convinced by the extrapolations. For a relative layperson like me the trajectory from GPT-2 to GPT-4 has indeed been jaw-dropping, and I definitely feel the described amazement at how quickly we (humans) normalise things that dropped our jaws so recently. But extrapolating this progress linearly still seems improbable to my simple brain (as if to prove this ‘simple’ assertion to myself I accidentally typed ‘brian’ three times before hitting on the correct spelling). As I understand it, the challenges of data availability and quality, algorithmic breakthroughs and hardware limitations acknowledged in the chapter are not trivial hurdles to overcome, though this first chapter does seem to promise a challenge to my thinking. Nor are the scaling issues and the relative financial and environmental costs trivial, and those costs must be the top priority whichever lens on capitalism you are looking through.

That being said, the potential for AI to reach or exceed PhD-level expertise in many fields by 2027 is sobering, though I remain sceptical about the ways in which each new iteration ‘achieves’ the benchmarks. Much of the ‘achievement’ masks very real and essential human interventions, yet human and AI ability are then compared in an apples-versus-oranges way without acknowledging those essential leg-ups. It reminds me a bit of some of the controversies around what merits celebration of achievement in this year’s Olympics: ‘acceptable’ and ‘unacceptable’ levels of privilege, augmentation, diets, birth differences and so on are largely masked and set aside behind narratives of wonder, until someone with an agenda picks on something as if to reveal, as a surprise, that Olympic athletes are actually very different from the vast majority of us (some breakdancers notwithstanding).

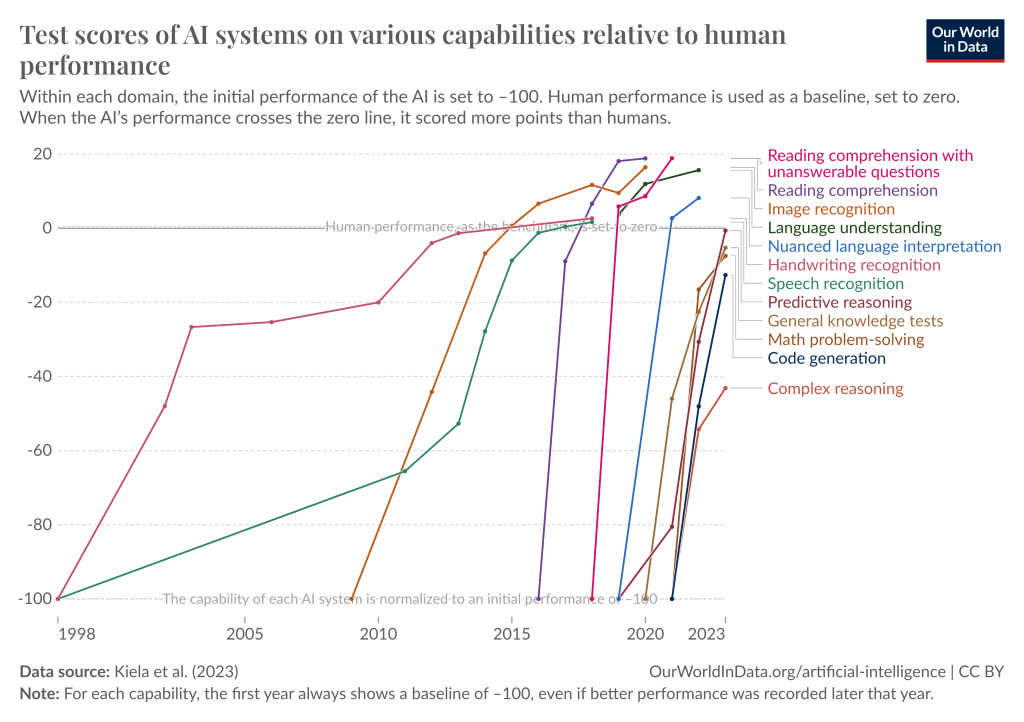

If the near-future cognitive performance predictions are realised, this will have profound implications for higher education and the job market. The current tinkering around the edges, as we blunder towards prudent and risk-averse change, may seem quaint much sooner than many imagine, and it definitely keeps me awake at night tbh. So, yes, human intelligence encompasses more than just information processing and recall, but we shouldn’t ignore the successes against existing benchmarks, whatever frailties those benchmarks have in design or efficacy. Aschenbrenner says one lesson from the last decade is that we shouldn’t bet against deep learning. We can certainly see how ‘AI can’t do x’ narratives so often and so swiftly make fools of us. In that first chapter Aschenbrenner shares this image from ‘Our World in Data’, which also has a dynamic, editable version. The data comes from Kiela et al. (2023), who acknowledge that benchmark ‘bottlenecking’ is a hindrance to progress but say that significant improvements are in train.

Look at the abilities in image recognition, for example. Based on some books and papers from the late 2010s and early 2020s that I have read, I get the sense that even within much of the AI community the abilities of AI systems in that domain will have come as a big surprise. By way of illustration, here is my alt text for the image above, which I share here unedited from ChatGPT:

A graph showing the test scores of AI systems on various capabilities relative to human performance. The graph tracks multiple AI capabilities over time, with human performance set as a baseline at 0. The AI capabilities include reading comprehension, image recognition, language understanding, speech recognition, and others. As the lines cross the zero line, it indicates that AI systems have surpassed human performance in those specific areas.

I asked for a 50-word overview suitable for alt text. Not only does it save me labour (without, I should add, diminishing the cognitive labour necessary to get my head around what I am looking at), it also tells me there’s no excuse not to add alt text to things, now that we can streamline workflows with tools that can support me in this very way.

The nuanced aspects of creativity, emotional intelligence and contextual understanding may prove to be more challenging for AI to replicate meaningfully, but even there we are being challenged to define what is human about human cognition and emotion and art and creativity. As educators, the challenges are huge. Even if the extrapolations are only 10% right, this signals disruption like never before. For me, in the short term, it suggests we need to double down on the endeavours many of us have been pushing: redefining what an education means at UG and PG level, what is valuable, and how we augment what we do and how we learn with AI. We can’t ignore it, that’s for sure, whether we wish to or not. We should be preparing our students for a world where AI is a powerful tool so that, at the very least, we can avert a drift towards the dangerous replacement of human cognition and decision-making.

Kiela, D., Thrush, T., Ethayarajh, K., & Singh, A. (2023) ‘Plotting Progress in AI’, Contextual AI Blog. Available at: https://contextual.ai/blog/plotting-progress (Accessed: 20 Aug 2024).