I’m sorting through all my files as I prep to hand over my role and start my new job, and I came across this discussion activity that I designed but have yet to run. It’s in three parts, with each part designed to be ‘released’ after discussion of the previous part. In this way it could work synchronously or asynchronously.

Part 1: AI, cheating and misuse

AI-related misconduct in HE is far more complex than simple notions of “cheating”. In fact, how we define cheating (both institutionally and individually) is worth revisiting too. Recent prominent news stories (examples at end) reveal sharp inconsistencies in how universities define, detect and sanction what is seen as inappropriate AI use, despite there being, at best, only emergent policy and certainly wide-ranging understandings and interpretations of what is acceptable. Some ban all AI; others permit limited use with disclosure. Cases show students wrongly accused, rules unevenly applied and anxiety rising across the sector among both staff and students.

Key points

- Huge variation exists between and within institutions: one university may expel a student for Grammarly use, another may allow it.

- Detector-driven accusations often fail under appeal because AI scores are not proof.

- Policies lag behind practice: vague guidance leaves staff and students uncertain what constitutes fair or inappropriate use.

- Where detectors are used, false positives disproportionately affect neurodivergent and non-native English writers whose linguistic patterns deviate from ‘naturalistic’ norms, expectations or markers.

- Misconduct procedures must ensure the provider proves wrongdoing; suspicion is insufficient.

- I would argue that assignments with fabricated or ‘hallucinated’ references should automatically fail though this does not seem to be consistent, even where such examples are used as markers of inappropriate AI use.

Initial discussion prompts

- Is ‘AI misconduct’ a meaningful category or an unhelpful new label for old issues? How else might we provide an umbrella term? Perhaps one that is more neutral for assessment policy.

- How do inconsistent institutional rules affect fairness for students across programmes? Where are these inconsistencies? What needs updating/ changing?

- What does it mean for academic judgement when technology, not evidence, drives decisions? Even though we have decided at King’s not to use detectors, we need to ensure that ‘free’ detectors are not used either, nor other digital traps or tricks that undermine trust (eg, deliberately false references included in reading; hidden prompt ‘poisoners’ in assessment briefs).

- Do we need to overhaul common understandings (eg in academic integrity policy) of what constitutes cheating, how far third-party proofreading can be considered legitimate and whether plagiarism is an adequate umbrella term in the age of AI? Is this a good time to rethink what we assume are shared understandings and to consider whether this ideal is actually a mask or illusion?

Part 2: Detecting AI: Not as simple or obvious as we might think

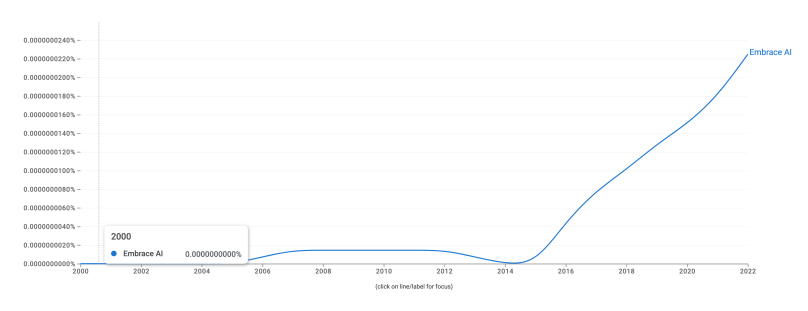

Detection of AI-generated writing either by tech means (using AI to detect AI) or ‘because it feels like AI’ has never been as easy as some make out, and it is increasingly difficult as tools proliferate and improve. While tools claim to identify machine-written work through statistical cues such as ‘perplexity’ and ‘burstiness’, modern large language models are now able to better mimic these fluctuations. Detection confidence often rests on illusion: humans and detectors alike may only be spotting inexpert AI writing. Skilled prompting, ‘humanisers’, and improved models blur the line between human and synthetic text, exposing the fallibility of ‘gut-feeling detection’. Read more here.

Key points

- Early AI detectors relied on predictable signatures in low-temperature outputs; current models vary their linguistic rhythm automatically, making detection increasingly unreliable.

- Human readers are equally fallible: many assumed ‘tells’ (clarity, neatness, tone, specific word choices, punctuation norms) may simply reflect the style of conscientious, neurodivergent or multilingual students.

- False accusations carry ethical and procedural risks. The Office of the Independent Adjudicator has also reinforced risks related to use of AI detectors.

- King’s guidance (rightly imo) cautions against use of detection tools and emphasises contextual evidence and dialogue. This includes detection by ‘feel’ of writing.

- The sector consensus (see articles from Jisc, THE and Wonkhe, below) is clear: detectors may inform suspicion but never constitute evidence.

Discussion prompts

- What do you notice first when you think ‘this feels AI-written’? Are there red lines we can all agree are out of bounds? Are the boundaries of acceptable use fluid? Can we have policy for that?

- How could you verify suspicion without breaching trust or due process?

- When detection becomes guesswork, what remains of professional judgement?

Part 3: Mitigation strategies

Preventing AI-related misconduct demands more than surveillance; it requires redesign. UK universities (see media reports below) increasingly emphasise prevention through clarity, curriculum and compassionate assessment. The approach promoted by King’s Academy thus far is one that promotes a culture of integrity with the aim of creating a shared understanding, not fear of detection.

Key points

- Clarify rules: define what counts as acceptable AI use in each assignment and require students to declare any assistance. This is what the initial guidance and current roadshows are about but how can we formalise that?

- Embed AI literacy: teach students how to use AI ethically and critically rather than banning it outright. And staff too, of course.

- Redesign assessment: prioritise process, originality, and context (drafts, reflections, local or personal data).

- Diversify formats: include vivas, oral presentations, in-person elements, or authentic tasks resistant to outsourcing.

- Support equity: ensure guidance accounts for assistive technologies and language tools legitimately used by disabled or multilingual students.

- Encourage dialogue: normalise discussion of AI use between staff and students rather than treating it as taboo.

Discussion prompts

- What elements of your assessment design could make AI misuse less tempting or effective?

- How might explicit permission to use AI (within limits) enhance transparency and trust?

- Which AI-aware skills do your students most need to learn and how will we accomplish that? Where does the role of policy sit? Where in the academy are the contradictions and tensions?

Further reading

Topinka, R. (2024) ‘The software says my student cheated using AI. They say they’re innocent. Who do I believe?’, The Guardian, 13 February. Available at: https://www.theguardian.com/commentisfree/2024/feb/13/software-student-cheated-combat-ai

Coldwell, W. (2024) ‘‘I received a first but it felt tainted and undeserved’: inside the university AI cheating crisis’, The Guardian, 15 December. Available at: https://www.theguardian.com/technology/2024/dec/15/i-received-a-first-but-it-felt-tainted-and-undeserved-inside-the-university-ai-cheating-crisis

Havergal, C. (2025) ‘Students win plagiarism appeals over generative AI detection tool’, Times Higher Education, 15 July. Available at: https://www.timeshighereducation.com/news/students-win-plagiarism-appeals-over-generative-ai-detection-tool

Dickinson, J. (2025) ‘Academic judgement? Now that’s magic’, Wonkhe, 8 May. Available at: https://wonkhe.com/blogs/academic-judgement-now-thats-magic/

Grove, J. (2024) ‘Student AI cheating cases soar at UK universities’, Times Higher Education, 1 November. Available at: https://www.timeshighereducation.com/news/student-ai-cheating-cases-soar-uk-universities

Rowsell, J. (2025) ‘Universities need to “redefine cheating” in age of AI’, Times Higher Education, 27 June. Available at: https://www.timeshighereducation.com/news/universities-need-redefine-cheating-age-ai

Webb, M. (2023) ‘AI writing detectors – concepts and considerations’, National Centre for AI / Jisc, 17 March. Available at: https://nationalcentreforai.jiscinvolve.org/wp/2023/03/17/ai-writing-detectors/