Inspired by something I saw in a meeting yesterday morning, I returned today to Gemini Canvas and the Claude equivalent (still not sure what it is called). Both tools are designed to enable you to “go from a blank slate to dynamic previews to share-worthy creations, in minutes.”

The resource I used was The Renaissance of the Essay? (LSE Impact Blog) and the accompanying Manifesto, which Claire Gordon (LSE) and I led on with input from colleagues at LSE and here at King’s. I wondered how easily I could make the manifesto a little more dynamic and interactive. In the first instance I was thinking about prompting engagement beyond the scroll, and secondly about text inputs and reflections.

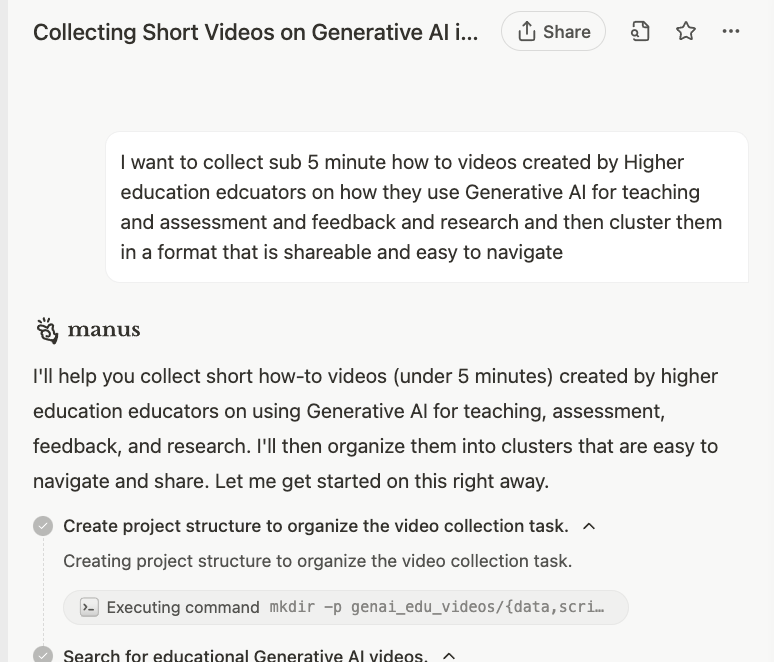

The basic version in Gemini was the fourth iteration. After a very basic initial prompt:

“turn this into an interactive web-based and shareable resource”

…I tweaked the underlying code (using natural language) so that the boxes were formatted for better readability and less scrolling, and so that the reflection component changed from plain additional text to a clickable pop-up. Of course, I still need to test it with a screen reader to see how that works.

I then experimented with adding reflection boxes and an export notes function. It took three or four tweaks (largely because of limits on the copy-text function in the browser), but this is the latest version. Obviously, with more work this could be made to look nicer, but I’m impressed with the initial output, the ability to iterate, and the functionality achieved in a very short time (about 15 minutes in total).

For the Claude one I thought I’d try including all those features, in-text input included, from the start. Perhaps that was a mistake: although the initial output looked great, the text input was buggy. Thirteen iterations later I got the input fixed. By then, however, the export function that I’d added around version 3 had stopped working, so I needed a lot more back and forth. In the end I ran out of time (about 40 minutes in, at version 19) and settled on this version with its inadequate copy/paste function.

It’s all still relatively new, and what’s weird about the whole thing is the continual release of beta tools, experimental spaces and things that in any other context would not be released to the world. Nevertheless, there is already utility visible here, and no doubt the tools will continue to improve. I sometimes think that my biggest barrier to finding utility is my own limited imagination; I definitely vibe off seeing what others have done. This further underlines for me a significant difference in perspective, and a problem we have going forward. ‘Here’s a thing,’ they say. ‘What’s it for?’ we ask. ‘I dunno,’ they shrug, ‘efficiency?’

‘tech bros shrugging’