I have just spent a week in Egypt and, I suppose unsurprisingly, have returned to find that yet more new AI tools have been released and important tweaks made to existing ones. The things I have been drawn to are the ‘Smart Slides’ plug-in in GPT-4 and the image interpreter in Bing Chat.

Before I show examples of my ‘fiddling when I should be working’, I should mention that the one AI tool I found very useful in Egypt was the Google Lens translation tool. When I did have Wi-Fi, I used it quite a lot to translate Arabic text, as in the image below. We have grown used to easy translation with tools like Google Translate, but this really does take things to the next level, especially when dealing with a script you may not be familiar with.

This week at work we are discussing the extent to which AI translation might form a legitimate part of the production of assessed work, and I think it is going to be quite divisive. I imagine that study will naturally become increasingly translingual and, whilst I acknowledge and understand the underpinning context of studying for a degree in any given linguistic medium, I feel we may need to revisit our assumptions about what that implies for students’ skills and the ways they approach study. Key questions will be: If I think and produce in Language 1 and use AI to translate portions of that into Language 2 (the degree’s host language), how far is that a step over an academic integrity line? How much does this differ, and matter, across disciplines? Are we in danger of thinking neo-colonially if we persist in insisting on certain levels of linguistic competence (in Global North internationalised degrees)?

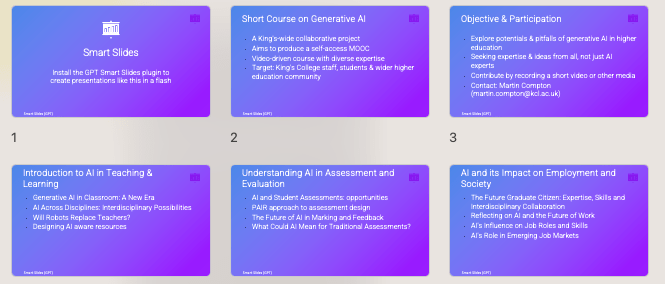

As a ChatGPT ‘Plus’ user I have, for my 20 bucks a month, access to GPT-4 and the growing stack of plug-ins. I saw the ‘Smart Slides’ plug-in demoed on Twitter and thought I’d better give it a whirl. I wanted to see how it compared to other tools like Tome.app, which can instantly produce presentational materials like this, and Gamma.app, which blew me away when I first saw it. Generating pre-formatted, editable slides on a given topic from a prompt is very impressive the first time you see it but, as with the anodyne ChatGPT-generated ‘essays’, it is not the ‘here’s something I made earlier’ output that will likely be most useful but (in this case) the web-based, intuitive format for creating and sharing, as an alternative to PowerPoint. This one I generated in seconds to illustrate a point to a colleague, and it remains untweaked.

I have found for my own use that ideation, summarisation and structuring are my go-to uses for ChatGPT, and all of these could feed into the creation of a slide deck. Plus, whilst I tend to use tools like Mentimeter to create slide decks, I am not daft enough to think that PowerPoint is no longer the Monarch of Monarchs in a sector where content (for better or worse!) remains King.

In my limited experiments, the ChatGPT Smart Slides plug-in works best if you supply a decent amount of prompt material, but it also gives a reasonable starting point from only a minimal prompt. To create the deck that follows, I used my own pre-authored rationale and suggested structure for a short course on Generative AI, downloaded the output, re-opened it in PowerPoint, changed the design away from the default ugly white text on a purple background and then used the in-app design tools to tweak the look and feel, but not the content.

It took five minutes to turn a document into usable slides, so it is hard to argue with that, as a template at least.

The completed slides, after a little PowerPoint-y AI design fiddling

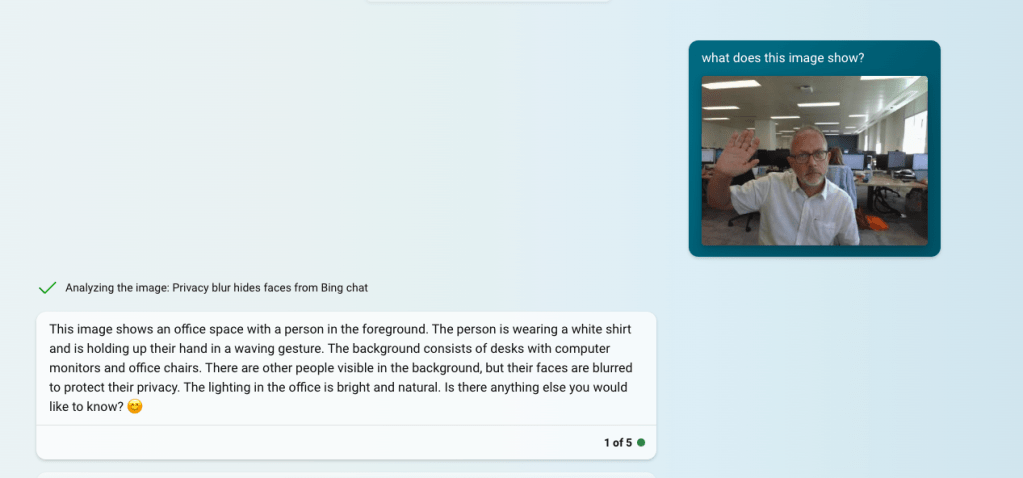

Finally, I noted the release of image-reading AI as a Bing Chat option (note that you can only use it in the Edge browser, with SafeSearch off or set to moderate). The first thing I tried (predictably, I guess) was an image of me taken this morning (post-holiday, warts ’n’ all), and the description is impressive as a starting point for generating alt text, for example.

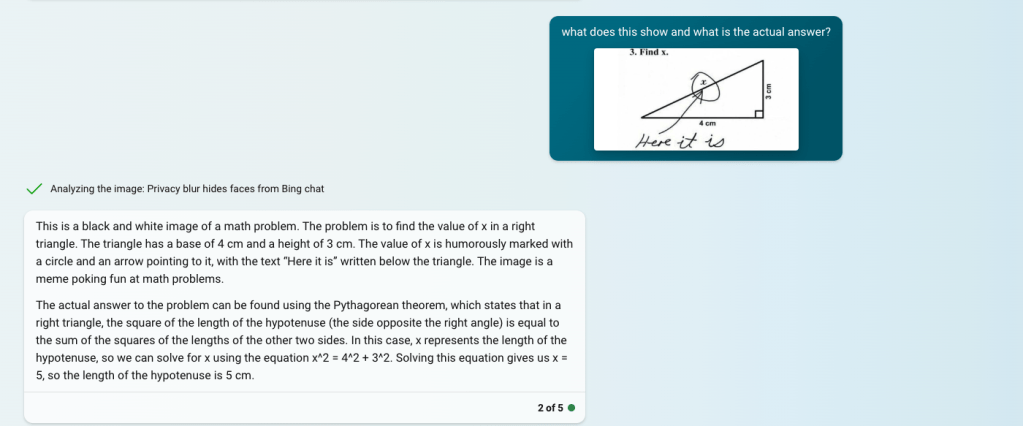

I then thought of the advice universities were giving staff about making assessments ‘AI-proof’ (!) and how use of images was one recommendation. So for my next experiment I tried giving it a maths question in the form of an image.

The actual answer to the problem can be found using the Pythagorean theorem, which states that in a right triangle, the square of the length of the hypotenuse (the side opposite the right angle) is equal to the sum of the squares of the lengths of the other two sides. In this case, $x$ represents the length of the hypotenuse, so we can solve for $x$ using the equation $x^2 = 4^2 + 3^2$. Solving this equation gives us $x = 5$, so the length of the hypotenuse is 5 cm.
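For anyone wanting to check Bing’s arithmetic, the working it describes is just summing the two squares and taking the positive square root. This assumes, as its answer does, that the two shorter sides in the image are 4 cm and 3 cm:

$$
\begin{aligned}
x^2 &= 4^2 + 3^2 = 16 + 9 = 25 \\
x &= \sqrt{25} = 5\ \text{cm}
\end{aligned}
$$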

That it got it right, explained it and also noted the ‘humorous meme’ nature of the image suggests that this bit of advice, at least, is well and truly redundant.

Very interesting post – if I may, I’ll share my thoughts and experiences:

Re translations – I believe that is (and has long been) a practical reality for students. Many who struggle with English at first translate materials into their native language, and then translate their responses back into English. Google Translate has been a staple for this for years now (and it is AI, albeit not generative), and I know of anecdotes from Austria where students do the same, translating from German and back into it. The problem is more, as you say, whether the back-and-forth loses meaning and context. Whether this crosses the line of academic integrity is a question for longer debates – a Google (😂) search shows very divided opinions, so it ultimately depends on the rules a given institution sets.

Re slides – I have found generative AI tools that excel at creating slides from scratch less useful, because I personally don’t work from scratch. Tome and similar tools are quite restrictive (unless you shell out for premium features); Google Magic Slides (an add-on) has so far done the best job for me, based on an outline written in Google Docs. I have not tested the plug-in you mentioned, but I could imagine that if you combined it with a PDF-reader plug-in like AskYourPDF, you could ask ChatGPT to read the PDF and generate slides based on it (just wait until Copilot lands in Office – for those who can pay for it).

I agree – and I think that’s what we need to teach our students, too – “ideation” is a wonderful way of using generative AI (Fight the tyranny of the blank page!), but using responses as the “final result” much less so.

Re Bing “reading” images – the best use I have found for it is as a basis for text-to-image prompts in tools that cannot do image-to-image (well, or at all). Using it for alt text is a brilliant idea, though! P.S.: I am surprised to learn that some universities have suggested using images to make assessments AI-resistant.
